Reining in the performance of third party assets

Optimizing third party assets is simultaneously the most important and the most frustrating part of speeding up websites. Even though your website might be using the latest and greatest performance optimization techniques, you have little control over how well the assets loaded from third party domains are optimized.

I have lost count of the times I have emailed support or posted in product forums asking vendors to at least set a Cache-Control header on the assets they serve! For example, I got a (non-)reply from a very popular chat widget provider (used by at least 400k websites) when I told them they could cut the compressed size of their JavaScript by more than 40KB just by minifying their script. This issue has been open for over two years now, and we decided to move to a different provider, but that may not always be possible.

This is a good example of how the jQuery CDN started using higher levels of gzip compression after the issue was pointed out to them (still no brotli though :( )

paging @addyosmani @pbakaus @paul_irish: do we know folks running jQuery CDN? could we get gzip levels higher + brotli support online? :-)

— Ilya Grigorik (@igrigorik) July 30, 2018

This was a big win, because every website loading jQuery from that CDN benefited from the optimization. In this blog post, we will first briefly go over some of the ways in which third party assets can misbehave and hurt the performance of your website, and then we will talk about a new feature we are launching at Dexecure that gives you a bit more control over the situation.

Third party libraries are considered evil

Hey... come back, I was just joking! Of course, they aren’t all bad. Even if you are running a small website, chances are you are using third party products for analytics, A/B testing, chat widgets, etc. But here are a few ways in which having too many third party scripts on your page can hurt performance:

Single Point of Failure

Depending on the kind of asset you are loading from third party servers, those servers can be a Single Point of Failure (SPOF). This can be especially bad if you are loading fonts (e.g. Google Fonts or Typekit) or CSS (e.g. Font Awesome) from a different domain. Since CSS is a render blocking resource, you run the risk of not displaying any content to your users if the CSS doesn’t load. And this isn’t a theoretical problem either: Typekit was down a few years ago. And fonts not loading in time can lead to the Mitt Romney problem.

Improperly optimized assets

These are the three most common ways in which I see third party assets misbehave when it comes to performance:

Improper caching: This is one of the easiest performance wins, and most popular third party resources version their assets and send a long cache duration. But there is a long tail of resources that either don’t send a Cache-Control header at all or cache for a very short duration.

Assets not being minified or compressed: When your library is used by a lot of people, you owe it to them to make sure it is properly minified, compressed with brotli, etc.

Assets not on a CDN: Recently, I found a third party library hosted at cdn.libraryname.com, which was just pointing to a single EC2 server! Understandably, library authors can’t always be expected to host their libraries on a good CDN. And the definition of a “good” CDN is quite subjective too (if all your users are in Indonesia, you probably want a CDN with edge servers there).

JSManners by Andrew Betts has a more comprehensive checklist regarding this.
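The caching and compression problems can usually be spotted from response headers alone. Below is a rough audit sketch, assuming you already have the headers of a third party response as a dictionary; the helper name and the one-day threshold are hypothetical choices for illustration, and minification is not checked because it cannot be seen from headers.

```python
from __future__ import annotations

# Hypothetical threshold: flag anything cached for less than one day.
MIN_CACHE_SECONDS = 24 * 60 * 60


def audit_headers(headers: dict[str, str]) -> list[str]:
    """Flag common caching and compression problems in a response's headers."""
    # Header names are case-insensitive, so normalize them first.
    h = {name.lower(): value for name, value in headers.items()}
    problems = []

    cache_control = h.get("cache-control", "")
    max_age = None
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            max_age = int(value)

    if not cache_control:
        problems.append("no Cache-Control header")
    elif max_age is None or max_age < MIN_CACHE_SECONDS:
        problems.append("short or missing max-age")

    # Only gzip and brotli ("br") count as compressed here.
    if h.get("content-encoding", "").lower() not in ("gzip", "br"):
        problems.append("not compressed with gzip or brotli")

    return problems


print(audit_headers({"Content-Type": "application/javascript"}))
print(audit_headers({"Cache-Control": "public, max-age=31536000", "Content-Encoding": "br"}))
```

The first call reports both problems; the second, a long-lived brotli-compressed response, reports none.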

More domains, more setup

Each extra domain that you connect to involves a DNS lookup, setting up a TCP connection and negotiating SSL before you can start downloading from that domain. This usually involves 3-4 round trips, which can easily add up to 300ms-400ms for every domain and can seriously impact the performance of your website, especially on high latency connections.
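The 300ms-400ms figure is simple arithmetic once you fix a round-trip time. Here is a back-of-the-envelope sketch, assuming an uncached DNS lookup that costs one round trip at the same latency as the server, one round trip for the TCP handshake, and two for a full TLS 1.2 handshake (one for TLS 1.3). The function name is hypothetical.

```python
def connection_setup_ms(rtt_ms: float, tls_round_trips: int = 2) -> float:
    """Estimate the time before the first request byte can be sent to a new domain.

    Simplifying assumptions: the DNS lookup is not cached and costs one round
    trip, the TCP handshake costs one, and TLS costs `tls_round_trips`
    (2 for a full TLS 1.2 handshake, 1 for TLS 1.3).
    """
    dns_round_trips = 1
    tcp_round_trips = 1
    return (dns_round_trips + tcp_round_trips + tls_round_trips) * rtt_ms


# On a connection with a 100 ms round-trip time:
print(connection_setup_ms(100.0))                     # 400.0 (TLS 1.2)
print(connection_setup_ms(100.0, tls_round_trips=1))  # 300.0 (TLS 1.3)
```

On a mobile network with a 200 ms round-trip time, the same formula doubles these numbers, which is why the cost hurts most on high latency connections.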

TCP slow start also means that each new connection takes some time to ramp up its congestion window. So each of these connections starts downloading assets slowly, even if you are on a high bandwidth connection.
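To see why a fresh connection is slow, here is an idealized slow start model: the congestion window starts at a fixed number of segments and doubles every round trip. The defaults (an initial window of 10 segments, as proposed in RFC 6928, and a 1460-byte segment size) are assumptions, and the model ignores packet loss, receive windows and the slow-start threshold.

```python
import math


def round_trips_to_download(size_bytes: int, init_cwnd: int = 10, mss: int = 1460) -> int:
    """Count the round trips needed to deliver `size_bytes` under idealized slow start."""
    segments_left = math.ceil(size_bytes / mss)
    cwnd = init_cwnd
    round_trips = 0
    while segments_left > 0:
        segments_left -= cwnd  # send one full congestion window this round trip
        cwnd *= 2              # the window doubles after every round trip
        round_trips += 1
    return round_trips


print(round_trips_to_download(14_600))                 # 1: fits in the initial window
print(round_trips_to_download(100_000))                # 3: 10 + 20 + 40 segments
print(round_trips_to_download(100_000, init_cwnd=80))  # 1: a warmed-up connection
```

The last line models a connection whose window has already grown, which is the situation of reusing a warm connection instead of opening a new one.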

Finally, in an HTTP/2 world, having fewer domains matters even more. Not only does HTTP/2 make hacks like domain sharding unnecessary, but congestion control and HTTP/2 priorities also work better with fewer connections.

Introducing Dexecure Proxy Mode

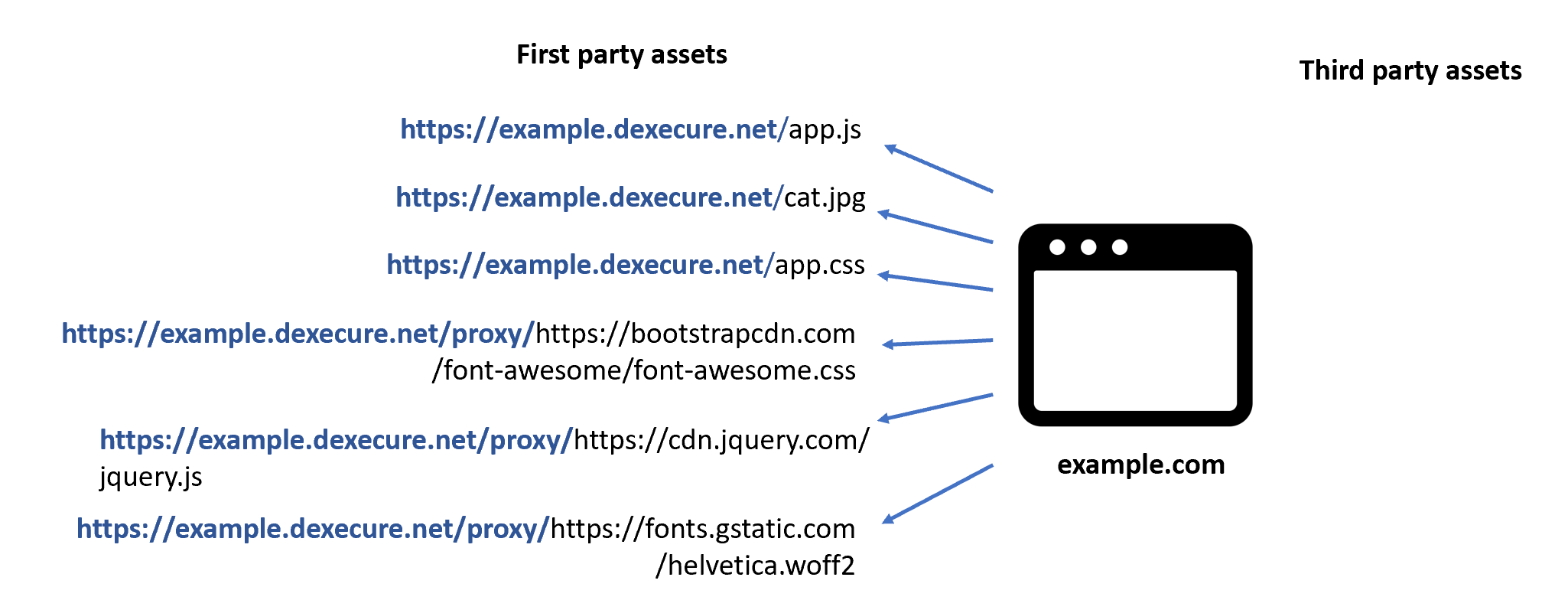

When you sign up for Dexecure, you get a domain like example.dexecure.net, and you get all our advanced frontend optimization features just by changing the domain name. For example, to optimize https://example.com/app.js, you would just need to change the URL to https://example.dexecure.net/app.js.
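The rewrite for first-party assets only swaps the host name. A minimal sketch with Python's standard `urllib.parse`, assuming the path and query string should be kept unchanged; the helper name is hypothetical and not part of any Dexecure API.

```python
from urllib.parse import urlsplit


def to_dexecure_url(asset_url: str, dexecure_host: str) -> str:
    """Swap the host of a first-party asset URL for the Dexecure domain (hypothetical helper)."""
    parts = urlsplit(asset_url)
    # Keep scheme, path, query and fragment; replace only the host.
    return parts._replace(netloc=dexecure_host).geturl()


print(to_dexecure_url("https://example.com/app.js", "example.dexecure.net"))
print(to_dexecure_url("https://example.com/css/site.css?v=3", "example.dexecure.net"))
```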

With the latest Dexecure update, you can elegantly optimize third party assets with the same optimization techniques and have more control over their performance. For example, this is how you can load jQuery from your own Dexecure domain instead of from cdn.jquery.com:

https://cdn.jquery.com/jquery.js -> https://example.dexecure.net/proxy/https://cdn.jquery.com/jquery.js
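Building a proxy-mode URL follows the pattern above: the full third party URL, scheme included, goes after `/proxy/` on your Dexecure domain. A small sketch of that transformation; the helper name and the check that the input is an absolute http(s) URL are illustrative assumptions.

```python
from urllib.parse import urlsplit


def to_proxy_url(asset_url: str, dexecure_origin: str) -> str:
    """Build <Dexecure origin>/proxy/<full third party URL> (hypothetical helper)."""
    if urlsplit(asset_url).scheme not in ("http", "https"):
        raise ValueError(f"expected an absolute http(s) URL, got {asset_url!r}")
    # The third party URL is appended as-is after /proxy/.
    return f"{dexecure_origin.rstrip('/')}/proxy/{asset_url}"


print(to_proxy_url("https://cdn.jquery.com/jquery.js", "https://example.dexecure.net"))
# https://example.dexecure.net/proxy/https://cdn.jquery.com/jquery.js
```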

How it works

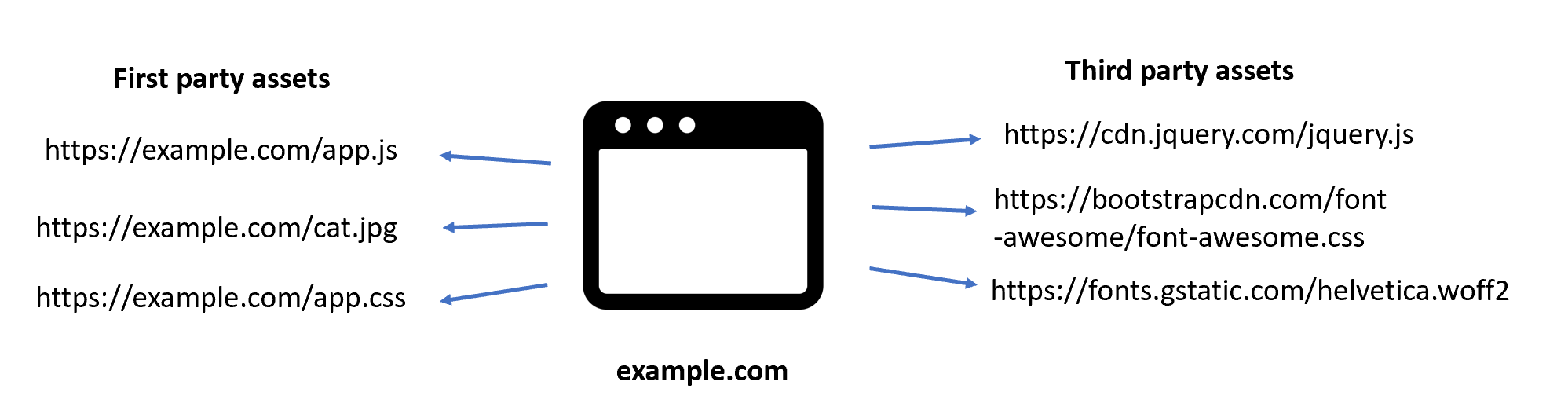

Let’s take a website example.com which loads some assets from their own domain and some assets from third party domains.

With the proxy mode, this is what it would look like.

The first time Dexecure receives a request like https://example.dexecure.net/proxy/https://cdn.jquery.com/jquery.js, we extract the target URL https://cdn.jquery.com/jquery.js, fetch the resource and optimize it using the same techniques we use for assets coming from your own domain. Once it is optimized, we cache the resource on a Content Delivery Network (CDN). The next time someone requests the URL, they download the optimized resource directly from the CDN.
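The fetch, optimize and cache flow can be sketched in a few lines. This is not Dexecure's implementation: the in-memory dictionary stands in for the CDN cache, `fetch` and `optimize` are hypothetical callables you would supply, and expiry is ignored here. One design choice worth noting is that a query string on the incoming request is treated as part of the target URL.

```python
from __future__ import annotations

from typing import Callable
from urllib.parse import urlsplit

PROXY_PREFIX = "/proxy/"


def extract_target_url(path: str, query: str = "") -> str:
    """Recover the third party URL from a path like /proxy/https://cdn.jquery.com/jquery.js."""
    if not path.startswith(PROXY_PREFIX):
        raise ValueError(f"not a proxy request: {path!r}")
    target = path[len(PROXY_PREFIX):]
    if urlsplit(target).scheme not in ("http", "https"):
        raise ValueError(f"unsupported target URL: {target!r}")
    # A query string on the incoming request belongs to the target URL.
    return f"{target}?{query}" if query else target


class ProxyCache:
    """First request: fetch, optimize, cache. Later requests: serve the cached copy."""

    def __init__(self, fetch: Callable[[str], bytes], optimize: Callable[[bytes], bytes]):
        self.fetch = fetch                 # stand-in for an HTTP client call
        self.optimize = optimize           # stand-in for the optimization pipeline
        self.cache: dict[str, bytes] = {}  # stand-in for the CDN cache

    def handle(self, path: str, query: str = "") -> bytes:
        target = extract_target_url(path, query)
        if target not in self.cache:
            self.cache[target] = self.optimize(self.fetch(target))
        return self.cache[target]


# Usage with stand-in functions so the flow runs offline.
fetched = []


def fake_fetch(url: str) -> bytes:
    fetched.append(url)
    return b"  function hello() { return 1; }  "


proxy = ProxyCache(fetch=fake_fetch, optimize=bytes.strip)
first = proxy.handle("/proxy/https://cdn.jquery.com/jquery.js")
second = proxy.handle("/proxy/https://cdn.jquery.com/jquery.js")
print(first, len(fetched))  # b'function hello() { return 1; }' 1
```

The second request is served from the cache, so the third party domain is contacted only once.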

Advantages

Fewer round trips: This completely eliminates the additional DNS lookups, TCP handshakes and SSL negotiation, because we reuse the connection that the browser already has to your Dexecure domain.

Faster downloads: By using an already warm connection to download third party assets as well, we circumvent the problem of TCP slow start, leading to faster downloads.

HTTP/2 connection priorities: With HTTP/2, the fewer connections, the better. With multiple connections, congestion control happens independently on each one, and no connection is aware of what is happening on the others. With fewer connections, congestion control works better, and the available bandwidth is hopefully used better too.

Automatic optimization of third party assets: You no longer need to worry about whether the third party libraries you use are properly optimized. After we fetch a third party resource, we run it through the same optimization pipeline we use for the resources on your own domain.

Use the best CDN for your use case: When using Dexecure, you also get to choose the best CDN for your traffic profile. We integrate with different CDNs like CloudFlare, CloudFront, Fastly, CDNetworks, or even your own internal Varnish cluster! You aren’t tied to what CDN the third party provider is using.
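The round-trip savings come down to how many distinct hosts the browser has to connect to. Here is a sketch that counts the new connections a page needs beyond its own host, before and after routing assets through a Dexecure domain. The chat and analytics hosts are hypothetical, and the four round trips per connection is the same assumption as in the earlier setup-cost estimate. Browsers open connections in parallel, so the total is an upper bound on wall-clock cost, not a prediction of it.

```python
from __future__ import annotations

from urllib.parse import urlsplit


def new_connections(page_url: str, asset_urls: list[str]) -> set[str]:
    """Hosts other than the page's own that the browser must open connections to."""
    page_host = urlsplit(page_url).netloc
    return {urlsplit(url).netloc for url in asset_urls} - {page_host}


page = "https://example.com/"
direct = [
    "https://example.com/app.js",
    "https://cdn.jquery.com/jquery.js",
    "https://chat.example.org/widget.js",       # hypothetical chat widget host
    "https://analytics.example.net/track.js",  # hypothetical analytics host
]
via_dexecure = [
    "https://example.dexecure.net/app.js",
    "https://example.dexecure.net/proxy/https://cdn.jquery.com/jquery.js",
    "https://example.dexecure.net/proxy/https://chat.example.org/widget.js",
    "https://example.dexecure.net/proxy/https://analytics.example.net/track.js",
]

SETUP_ROUND_TRIPS = 4  # assumption: DNS + TCP + full TLS 1.2 handshake
for label, urls in (("direct", direct), ("via Dexecure", via_dexecure)):
    hosts = new_connections(page, urls)
    print(f"{label}: {len(hosts)} new connection(s), {len(hosts) * SETUP_ROUND_TRIPS} setup round trips")
```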

Why can’t I just download the asset and host it on my own server?

Self hosting is a viable approach for assets that are definitely not going to change, a particular version of jQuery for example. But for scripts that might change over time, it is easier to proxy them automatically with something like Dexecure. Dexecure is configured to respect the cache settings that the third party domain sends via its Cache-Control header. For example, if the third party serves an asset with a cache time of 1 day, Dexecure (and the CDN we integrate with) will optimize the asset and cache it on the CDN for exactly 1 day. After that, we go back to the third party domain to re-fetch and re-optimize the asset.
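Respecting the third party's Cache-Control header boils down to deriving a time-to-live from it and re-fetching once that expires. The sketch below is a simplified reading of HTTP caching rules for a shared cache such as a CDN, not Dexecure's actual logic: `s-maxage` takes precedence over `max-age`, while `no-store`, `private` and (conservatively) `no-cache` mean "do not cache". Returning 0 for a missing header is also an assumption; the article does not say what happens in that case.

```python
from __future__ import annotations


def shared_cache_ttl(cache_control: str | None) -> int:
    """Seconds a shared cache may keep a response, per its Cache-Control header (simplified)."""
    if not cache_control:
        return 0  # assumption: no header means no caching
    directives = {}
    for part in cache_control.split(","):
        name, _, value = part.strip().partition("=")
        directives[name.strip().lower()] = value.strip().strip('"')
    if any(d in directives for d in ("no-store", "no-cache", "private")):
        return 0
    # For shared caches, s-maxage overrides max-age.
    for name in ("s-maxage", "max-age"):
        value = directives.get(name, "")
        if value.isdigit():
            return int(value)
    return 0


def is_fresh(fetched_at: float, cache_control: str | None, now: float) -> bool:
    """True while the cached copy is within its TTL; after that, re-fetch and re-optimize."""
    return now < fetched_at + shared_cache_ttl(cache_control)


ONE_DAY = 24 * 60 * 60
print(shared_cache_ttl("public, max-age=86400"))               # 86400
print(is_fresh(0, "public, max-age=86400", now=ONE_DAY - 1))  # True
print(is_fresh(0, "public, max-age=86400", now=ONE_DAY))      # False
```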

Security considerations

As a security precaution, we whitelist the headers we send when fetching third party assets. This way, you can be sure that the cookies from your own domain are not sent to the third party domain.
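Whitelisting means forwarding only headers that are explicitly allowed, rather than trying to strip the dangerous ones. A minimal sketch of that filter; the allowlist below is hypothetical, since the article does not say which headers Dexecure actually forwards.

```python
from __future__ import annotations

# Hypothetical allowlist; anything not listed here is never forwarded.
FORWARDED_REQUEST_HEADERS = {"accept", "accept-language", "user-agent"}


def upstream_request_headers(incoming: dict[str, str]) -> dict[str, str]:
    """Keep only allowlisted headers when fetching a third party asset.

    Cookie, Authorization and any other header not on the list are dropped,
    so first-party credentials never reach the third party domain.
    """
    return {
        name: value
        for name, value in incoming.items()
        if name.lower() in FORWARDED_REQUEST_HEADERS
    }


browser_headers = {
    "User-Agent": "Mozilla/5.0",
    "Accept": "*/*",
    "Cookie": "session=abc123",
    "Referer": "https://example.com/checkout",
}
print(upstream_request_headers(browser_headers))
# {'User-Agent': 'Mozilla/5.0', 'Accept': '*/*'}
```

An allowlist fails safe: a new or unexpected header is dropped by default instead of leaking.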

If you are using a Content Security Policy (CSP) on your website, this approach can lead to a better policy too. Right now, if you want to load a single resource from cdn.rawgit.com, you need to whitelist the entire domain in your CSP. This makes it easier for an attacker to load another resource from the same domain if your website has a Cross Site Scripting (XSS) vulnerability. But if you are able to self-host the resource or proxy it via Dexecure, you can have a tighter CSP. Win!
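To make the difference concrete, here is a sketch that serializes the `script-src` directive for each case. It assumes scripts are the only resource type being restricted and uses example.dexecure.net as a hypothetical Dexecure domain; a real policy would usually carry more directives.

```python
from __future__ import annotations


def build_csp(directives: dict[str, list[str]]) -> str:
    """Serialize CSP directives into a Content-Security-Policy header value."""
    return "; ".join(f"{name} {' '.join(sources)}" for name, sources in directives.items())


# Loading one file from cdn.rawgit.com means trusting every script on that domain.
third_party = build_csp({"script-src": ["'self'", "https://cdn.rawgit.com"]})
# Self-hosting the file: only your own origin is trusted.
self_hosted = build_csp({"script-src": ["'self'"]})
# Proxying via a (hypothetical) Dexecure domain: trust your Dexecure domain instead.
proxied = build_csp({"script-src": ["'self'", "https://example.dexecure.net"]})

for policy in (third_party, self_hosted, proxied):
    print(f"Content-Security-Policy: {policy}")
```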

Use Dexecure to automatically optimize your website
